Exploring AI Agents: From Concept to Implementation

A look at AI agent architectures, with how-to guidance and examples.

The internet is flooded with AI agent solutions, which makes it challenging to choose the right one for your LLM (large language model) applications. In this article, I'll share my understanding of how these solutions work and when to use them.

It's important to note that production-level LLM apps often differ significantly from open-source or side projects. In production, the quality of your AI-generated content directly affects your reputation, and if your application doesn't provide real value, users will likely abandon it.

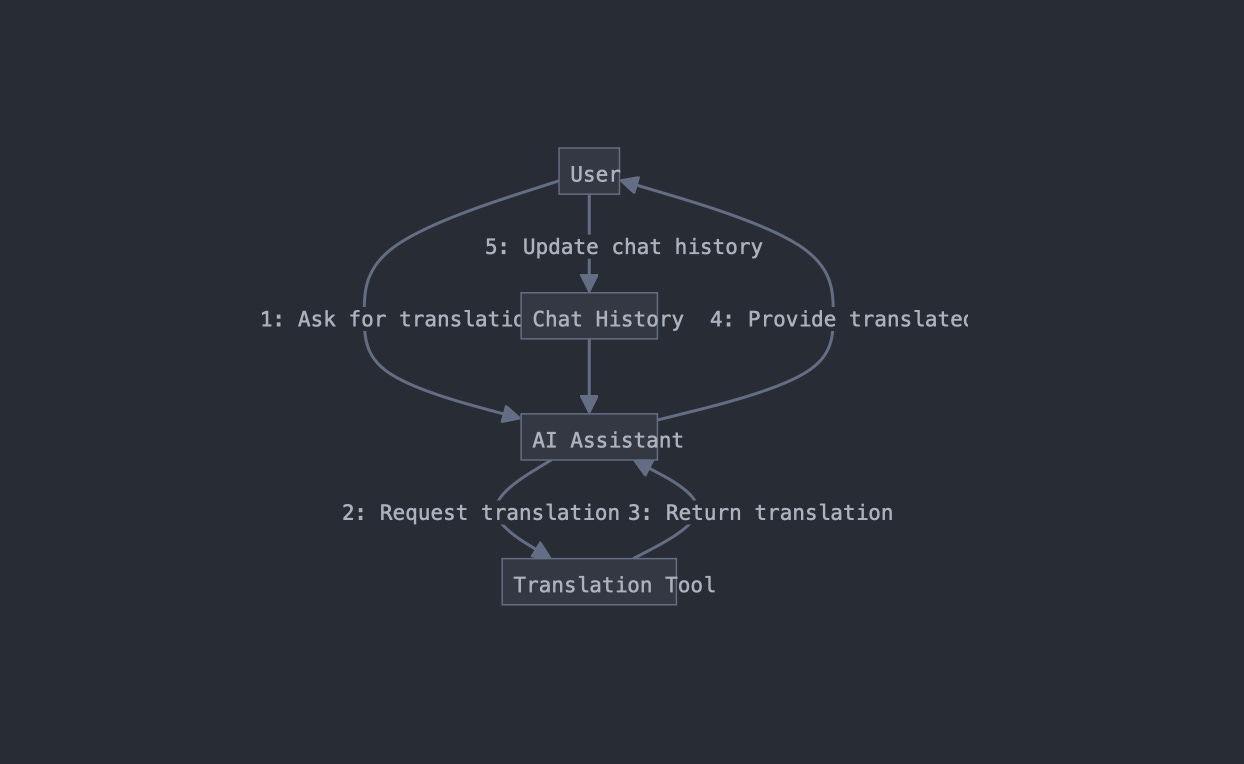

So, what exactly is an AI agent? Definitions vary, but LlamaIndex (a popular framework for indexing and querying data with LLMs) provides a useful one. According to its documentation, an agent is a semi-autonomous piece of software powered by an LLM, designed to complete a specific task through a series of steps. Here's how it works:

The agent is given a task and a set of tools. These tools can range from simple functions to complex query engines.

For each step of the task, the agent selects the most appropriate tool.

After completing a step, the agent evaluates whether the overall task is finished.

If the task is complete, the agent returns the result to the user. If not, it loops back to start the next step.
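To make the loop concrete, here is a minimal, framework-agnostic sketch in Python. It is not LlamaIndex's API: `decide` is a hypothetical stand-in for the LLM's reasoning (a hard-coded policy), and the two tools, `lookup` and `word_count`, are made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Step:
    tool: str
    arg: str
    output: str


@dataclass
class AgentState:
    task: str
    history: List[Step] = field(default_factory=list)


# Hypothetical tools: a tiny lookup table and a word counter.
TOOLS: Dict[str, Callable[[str], str]] = {
    "lookup": lambda key: {"llamaindex": "data framework for LLM apps"}.get(key, "unknown"),
    "word_count": lambda text: str(len(text.split())),
}


def decide(state: AgentState) -> Optional[Tuple[str, str]]:
    """Stand-in for the LLM: return (tool, arg) for the next step, or None when done.

    A real agent would prompt an LLM with state.task and state.history here.
    """
    if not state.history:
        return ("lookup", "llamaindex")
    if len(state.history) == 1:
        return ("word_count", state.history[0].output)
    return None  # the task is judged complete


def run_agent(task: str, max_steps: int = 5) -> str:
    state = AgentState(task)
    for _ in range(max_steps):  # guard against endless loops
        choice = decide(state)  # select the most appropriate tool
        if choice is None:  # is the overall task finished?
            return state.history[-1].output if state.history else ""
        tool, arg = choice
        output = TOOLS[tool](arg)  # execute this step
        state.history.append(Step(tool, arg, output))  # record it, then loop back
    raise RuntimeError("step limit reached before the task finished")


print(run_agent("How many words describe LlamaIndex?"))  # prints 5
```

The `max_steps` guard is a design choice worth keeping in any real agent: an LLM that never declares the task finished would otherwise loop forever.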

This approach allows for flexible and powerful AI-driven problem-solving, but choosing the right agent solution for your specific needs requires careful consideration.

Multi-Agent Collaboration: LlamaIndex vs LangChain

Both LlamaIndex and LangChain offer powerful frameworks for multi-agent collaboration in AI systems. Let's explore how each approach works and compare their strengths and limitations.

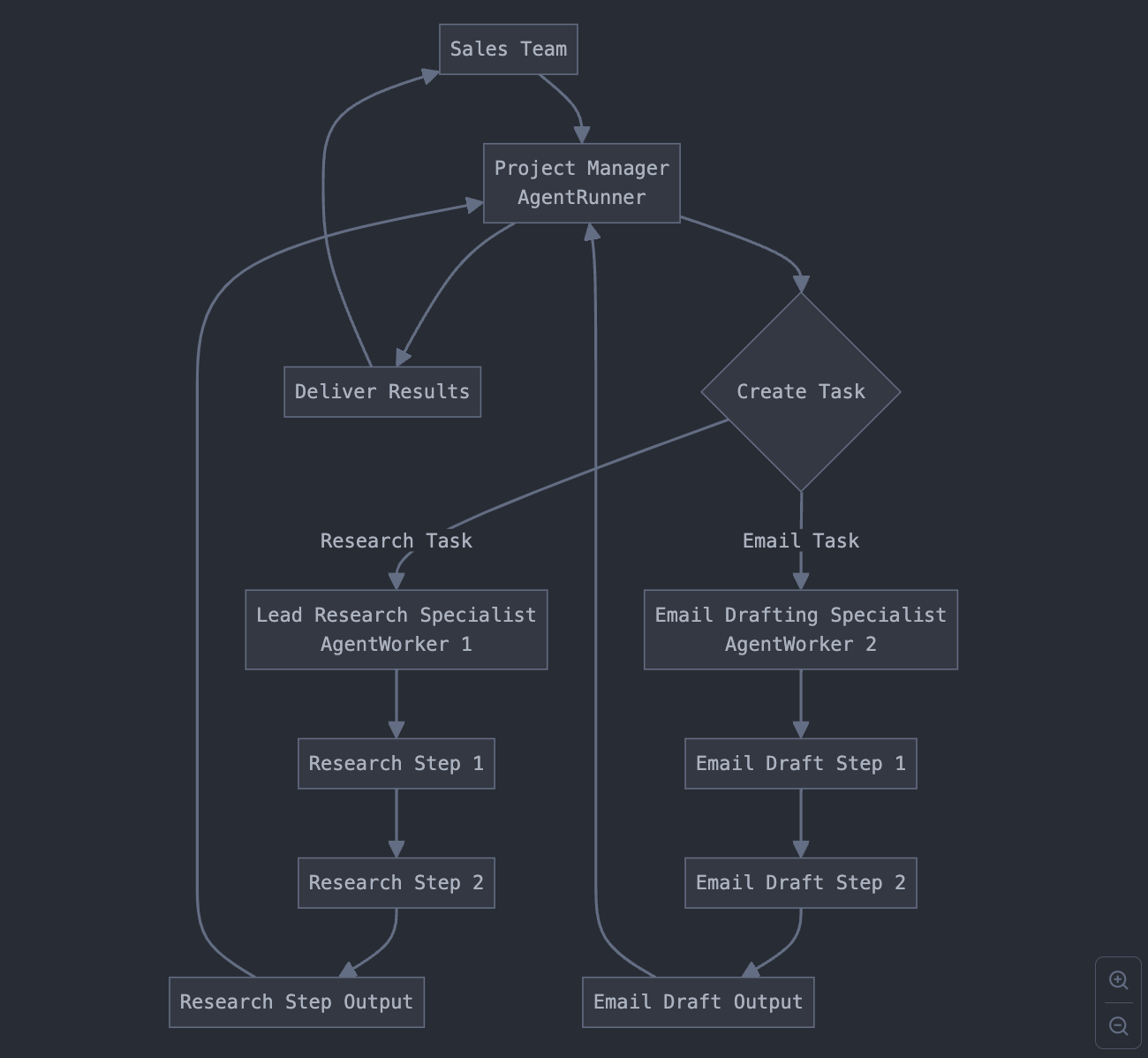

LlamaIndex Approach:

Architecture:

AgentRunner: Orchestrates the entire process, manages state, and interfaces with users.

AgentWorkers: Execute specific tasks step-by-step.

Supporting Elements: Task, TaskStep, and TaskStepOutput objects track the overall task, each individual step, and the result of each step.

Example: Sales Lead Management

Project Manager (AgentRunner) oversees the process.

Lead Research Specialist (AgentWorker) gathers information on potential clients.

Email Drafting Specialist (AgentWorker) creates personalized emails.

AgentRunner coordinates the workflow and delivers results to the sales team.
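A minimal sketch of the runner/worker split for this sales example, assuming plain Python rather than LlamaIndex itself. The `Runner`, `Task`, and `TaskStepOutput` classes below only mimic the roles described above; they are hypothetical and do not reproduce LlamaIndex's actual classes or signatures. The two worker functions return canned text where real workers would call an LLM or a CRM.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class TaskStepOutput:
    step: str
    output: str


@dataclass
class Task:
    input: str
    outputs: List[TaskStepOutput] = field(default_factory=list)


def research_lead(task: Task) -> str:
    # Hypothetical Lead Research Specialist: canned result instead of real research.
    return "mid-size retailer interested in analytics"


def draft_email(task: Task) -> str:
    # Hypothetical Email Drafting Specialist: builds on the previous step's output.
    research = task.outputs[-1].output
    return f"Hi {task.input} team, as a {research}, you might like our dashboards."


class Runner:
    """Orchestrator: owns all task state and decides which worker runs next."""

    def __init__(self, workers: Dict[str, Callable[[Task], str]]):
        self.workers = workers
        self.tasks: Dict[str, Task] = {}  # centralized state, easy to inspect

    def run(self, lead: str) -> str:
        task = Task(input=lead)
        self.tasks[lead] = task
        for step_name, worker in self.workers.items():  # fixed, step-wise order
            task.outputs.append(TaskStepOutput(step_name, worker(task)))
        return task.outputs[-1].output


runner = Runner({"research": research_lead, "email": draft_email})
print(runner.run("Acme"))
# prints: Hi Acme team, as a mid-size retailer interested in analytics, you might like our dashboards.
```

Because every intermediate `TaskStepOutput` lives in `runner.tasks`, you can inspect exactly what each worker produced, which is the debugging benefit of centralized state.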

Pros:

Clear separation of task management and execution.

Centralized state management for better control and debugging.

Structured approach suitable for complex, multi-step processes.

Cons:

Potential complexity for non-technical users.

Possible overhead on simpler tasks, since every task is broken into multiple steps.

Maintenance challenges with multiple interacting components.

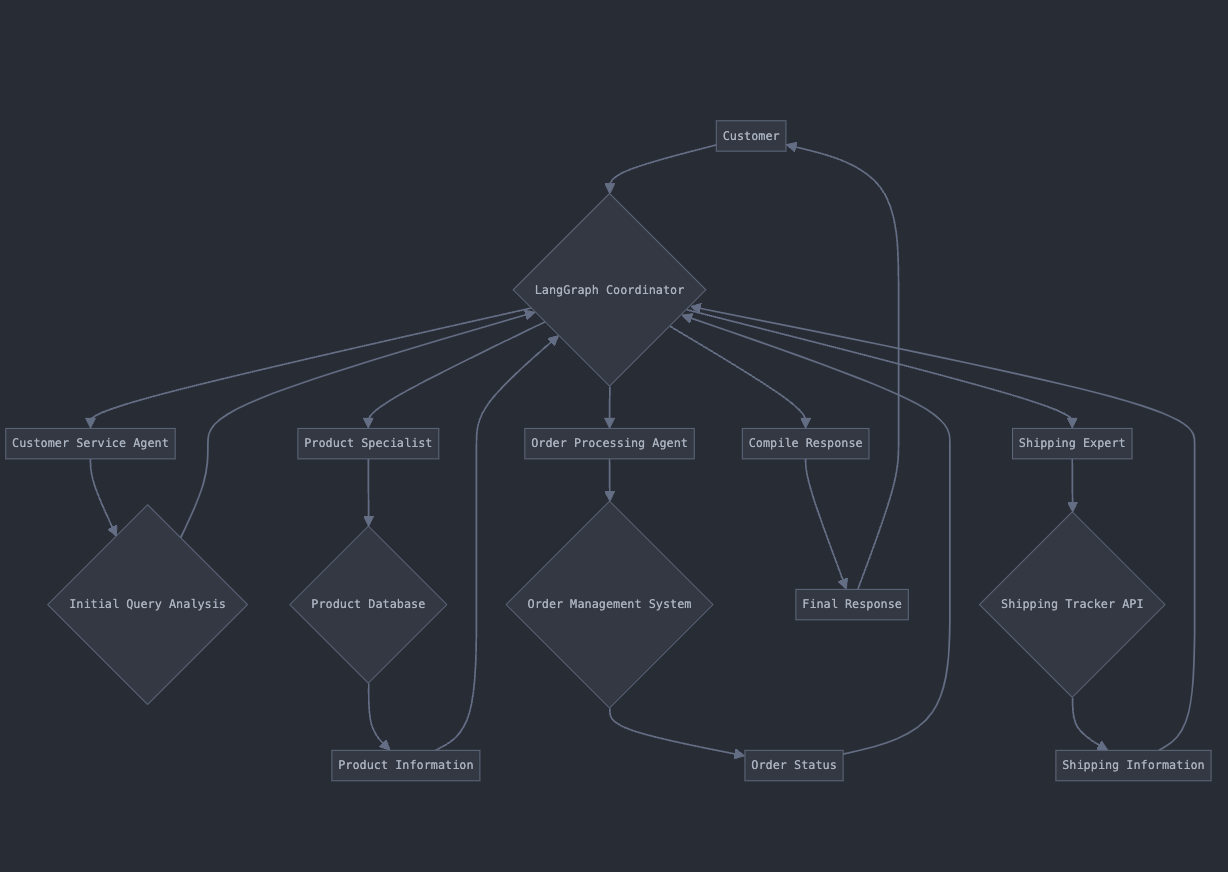

LangChain Approach:

Architecture:

Network of specialized agents coordinated through LangGraph.

Each agent is more independent and has role-specific capabilities.

Example: E-commerce Customer Service

Customer Service Agent coordinates the overall response.

Product Specialist, Order Processing Agent, and Shipping Expert handle specific aspects of the inquiry.

Agents collaborate through LangGraph to compile a comprehensive response.
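Here is a rough sketch of the coordinator-plus-specialists pattern, again in plain Python rather than LangGraph's API. The keyword routing in `customer_service_agent` is a simplifying assumption (an LLM would normally decide which specialists to call), and every specialist returns a hypothetical canned reply.

```python
from typing import Callable, Dict, List


# Independent specialist agents (hypothetical stand-ins for LLM-backed agents).
def product_specialist(query: str) -> str:
    return "The X200 blender is in stock."


def order_agent(query: str) -> str:
    return "Order #1001 was confirmed."


def shipping_expert(query: str) -> str:
    return "Standard shipping takes 3-5 business days."


# Routes from the coordinator to each specialist, keyed by a trigger word.
SPECIALISTS: Dict[str, Callable[[str], str]] = {
    "product": product_specialist,
    "order": order_agent,
    "ship": shipping_expert,
}


def customer_service_agent(query: str) -> str:
    """Coordinator: route the inquiry to relevant specialists, then compile their replies."""
    q = query.lower()
    replies: List[str] = [agent(query) for trigger, agent in SPECIALISTS.items() if trigger in q]
    return " ".join(replies) if replies else "Let me connect you with a human agent."


print(customer_service_agent("Where is my order and when will it ship?"))
# prints: Order #1001 was confirmed. Standard shipping takes 3-5 business days.
```

Adding a new specialist is just another entry in `SPECIALISTS`, which reflects the "easily added or modified" advantage; the flip side is that two triggers can match the same query, which is one way overlapping responsibilities creep in.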

Pros:

Dynamic agent interactions suitable for varied scenarios.

Independent agents can be easily added or modified.

Flexible workflow adaptable to changing requirements.

Cons:

Potential for overlapping responsibilities between agents.

May require more complex coordination for highly structured tasks.

Debugging can be challenging because decision-making is distributed across agents.

Key Differences:

State Management: LlamaIndex centralizes state in the AgentRunner, while LangChain distributes it across the agent network.

Task Execution: LlamaIndex uses step-wise execution controlled by the AgentRunner, whereas LangChain agents work more independently on subtasks.

Flexibility vs Structure: LangChain offers more dynamic interactions, while LlamaIndex provides a more structured approach.

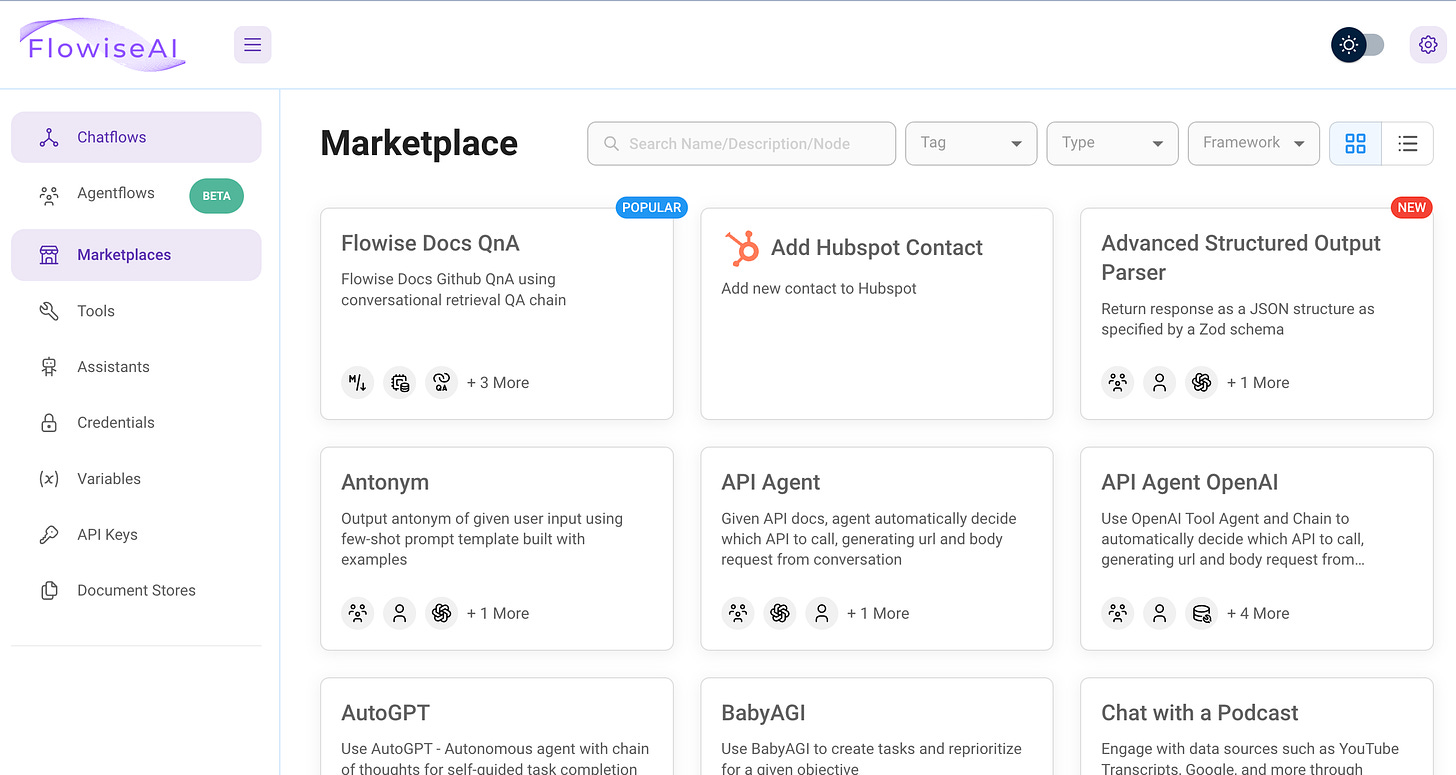

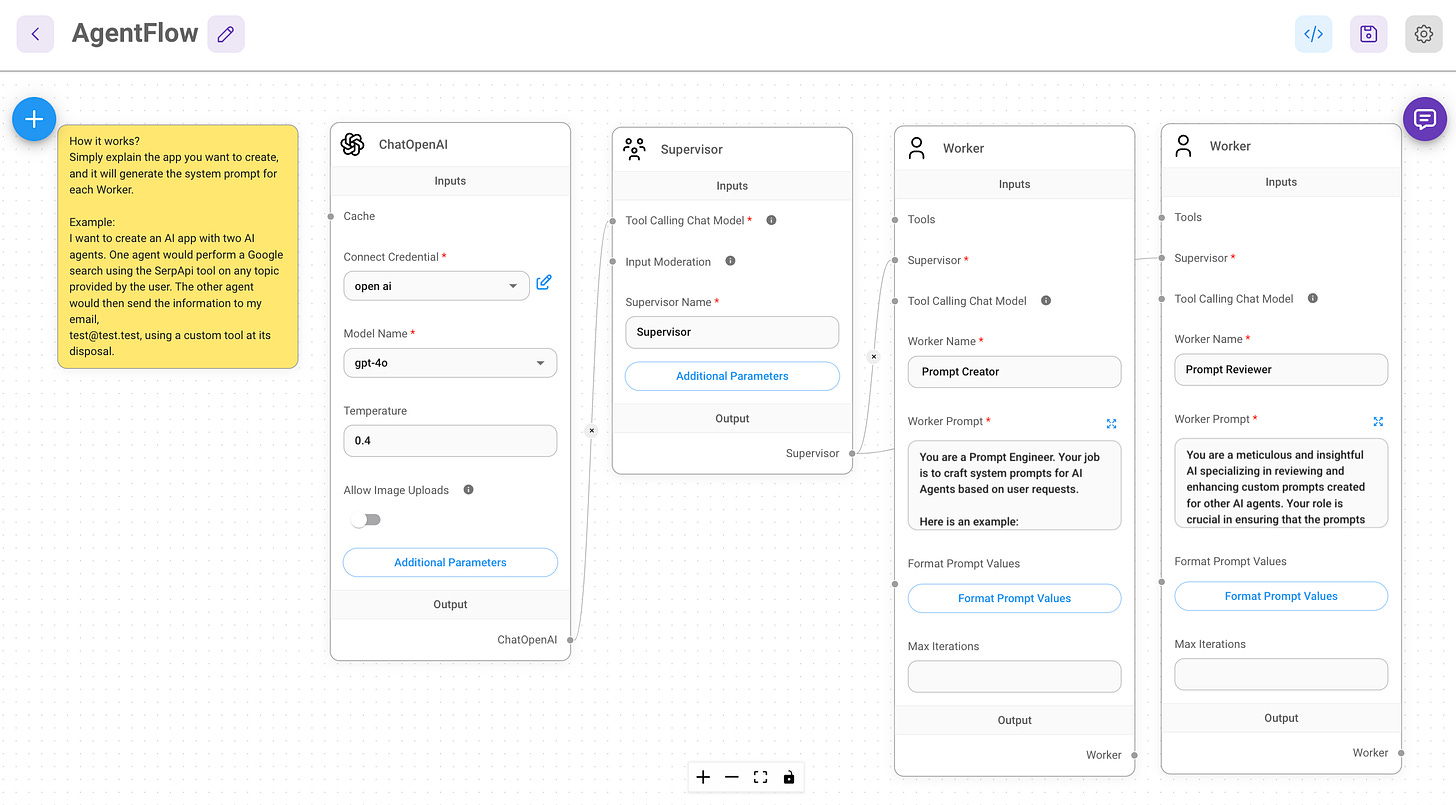

Experimenting with Flowise AI: A Practical Approach to Building AI Agents

Flowise offers a user-friendly platform for creating AI agents, bridging the gap between concept and implementation. Its visual interface and pre-made templates make it an excellent starting point for those new to AI agent development.

Key Advantages of Flowise:

Future-proofing: Easily scale from single agents to complex multi-agent systems as your projects grow.

Learning by Doing: Gain hands-on experience with AI concepts like embeddings and vector stores in a practical context.

Getting Started with Flowise:

Install Flowise globally with npm (Node Package Manager):

```bash
npm install -g flowise
```

Start Flowise:

```bash
npx flowise start
```

Open http://localhost:3000 in your web browser.

Exploring the Interface: Once loaded, you'll see a canvas where you can drag and drop components to build your AI agent workflow.

Using Templates:

Navigate to the "Templates" section.

Choose a template, such as "Basic Chatbot" or "Document Q&A".

Examine how the components are connected and configured.

Exploring Multi-Agent Setups:

Find a multi-agent template in the template library.

Study how multiple agents are coordinated.

Experiment with modifying worker prompts to understand their impact.

As you work with Flowise, you'll naturally encounter and learn about key AI concepts. Don't worry if terms like "embeddings" or "vector stores" seem unfamiliar at first – their meaning and importance will become clear as you build more complex agents.
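To demystify these two terms, here is a toy vector store in Python. It is an illustrative assumption, not how Flowise works internally: `embed` uses simple word counts instead of a learned embedding model, and `search` ranks stored texts by cosine similarity to the query.

```python
import math
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. Real systems use a neural embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a)  # missing words count as 0
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class VectorStore:
    """Stores (text, vector) pairs and returns the texts closest to a query."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def search(self, query: str, k: int = 1) -> List[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


store = VectorStore()
store.add("refunds are processed within 5 days")
store.add("our office is closed on sundays")
print(store.search("how long do refunds take"))
# prints: ['refunds are processed within 5 days']
```

A learned embedding model would also match "refund" to "money back"; the word-count version only matches shared words, which is exactly why real applications use proper embeddings.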

Remember, the goal is to start building and experimenting. Each project will deepen your understanding of AI agents and their potential applications in real-world scenarios.

Choosing the Right Framework: LlamaIndex vs LangChain

When deciding between LlamaIndex and LangChain, consider these key factors:

Project Structure:

LlamaIndex: Best for well-defined, hierarchical processes.

LangChain: Suited for dynamic, adaptable workflows.

Control and Debugging:

LlamaIndex: Offers centralized control and easier debugging.

LangChain: Provides more flexibility but potentially complex debugging.

Scalability:

LlamaIndex: Scales well within defined structures.

LangChain: Excels in adding new agent types or capabilities.

Team Expertise:

LlamaIndex: Familiar to teams used to traditional architectures.

LangChain: Suits teams experienced with distributed systems.

Integration:

Consider alignment with your current tech stack.

Remember, multi-agent systems in production are still evolving. For more linear workflows, consider AI "chains" instead, which I covered in my previous article.
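For contrast with the agent loop, a chain can be sketched as a fixed sequence of steps, each feeding its output into the next, with no tool selection and no "is the task finished?" check. This is a minimal plain-Python sketch; the step functions are hypothetical placeholders for what would usually be LLM calls.

```python
from functools import reduce
from typing import Callable

Step = Callable[[str], str]


def chain(*steps: Step) -> Step:
    """Run steps in a fixed order, feeding each output into the next step."""
    return lambda text: reduce(lambda acc, step: step(acc), steps, text)


# Hypothetical steps; in a real app each would typically be an LLM call.
def clean(text: str) -> str:
    return " ".join(text.split())  # collapse extra whitespace


def summarize(text: str) -> str:
    return text.split(".")[0] + "."  # keep only the first sentence


def format_reply(text: str) -> str:
    return "Summary: " + text


pipeline = chain(clean, summarize, format_reply)
print(pipeline("  Agents   loop.  Chains run once. "))
# prints: Summary: Agents loop.
```

The trade-off is predictability versus flexibility: a chain always does the same thing in the same order, which makes it easier to test and debug than an agent that decides its own next step.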

Ultimately, your choice should be based on your project's specific needs, team expertise, and scalability requirements. Consider building small proof-of-concept projects with each framework to evaluate their fit for your use case.

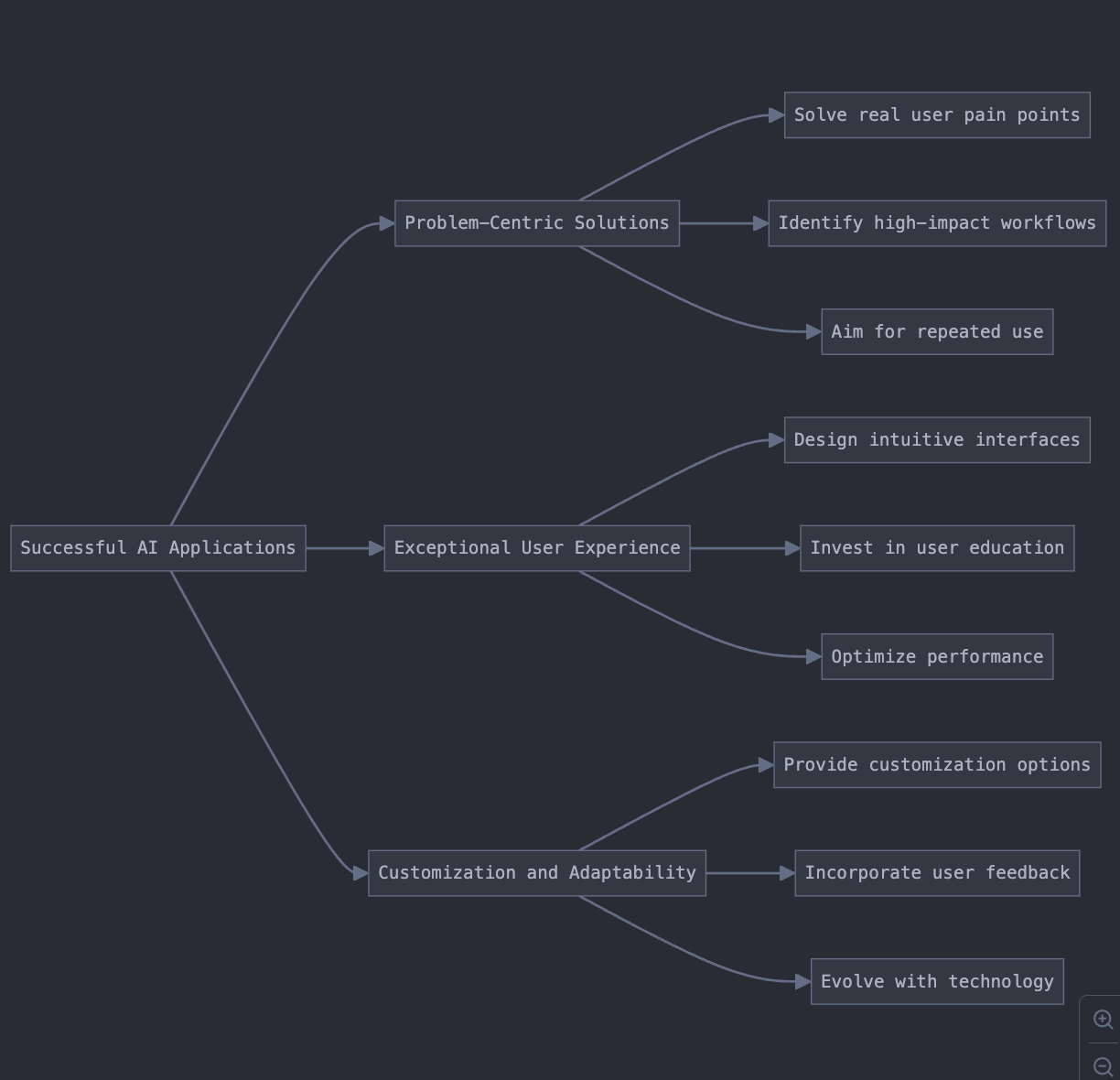

Final Thought

We are still in the early days of AI: models will improve significantly, and so will our familiarity with the technology. Yet despite the focus on technological advancements, the most successful applications are built on three fundamental pillars:

Problem-Centric Solutions:

Focus on solving real, significant user pain points.

Identify workflows where AI can provide substantial, measurable improvements.

Aim for applications with high potential impact and opportunities for repeated use.

Exceptional User Experience:

Design intuitive interfaces that make interacting with AI agents seamless and natural.

Invest in user onboarding and education to maximize the AI's utility.

Optimize response times and ensure consistency in output quality to maintain user engagement.

Customization and Adaptability:

Provide options for users to tailor the AI's behavior to their specific needs and preferences.

Continuously gather and incorporate user feedback to refine the AI agent's performance.

Stay flexible, ready to evolve your application as AI technology advances and user expectations change.

By focusing on these three pillars (solving real problems, delivering exceptional user experiences, and offering customization), you can create AI applications that not only leverage advanced technology but also provide lasting value to users.

Hi, I'm Kevin Wang!

By day, I'm a product manager diving into the latest tech innovations. In my free time, I'm the creator behind tulsk.io, a platform dedicated to PM Intelligence. My passion? Breaking down the complexities of web3 and AI into bite-sized, understandable concepts.

My goal is simple: to make cutting-edge technologies accessible to everyone. Whether you're looking to expand your knowledge or apply these insights to your personal growth, I'm here to guide you through the exciting worlds of web3 and AI.


LlamaIndex and LangChain are both frameworks for building LLM applications. LlamaIndex focuses on retrieval-augmented generation (RAG) use cases, while LangChain covers a broader range of applications.