Understanding Chains in AI Systems

Explore Practical Examples, Strengths, and Weaknesses of the Chain Process

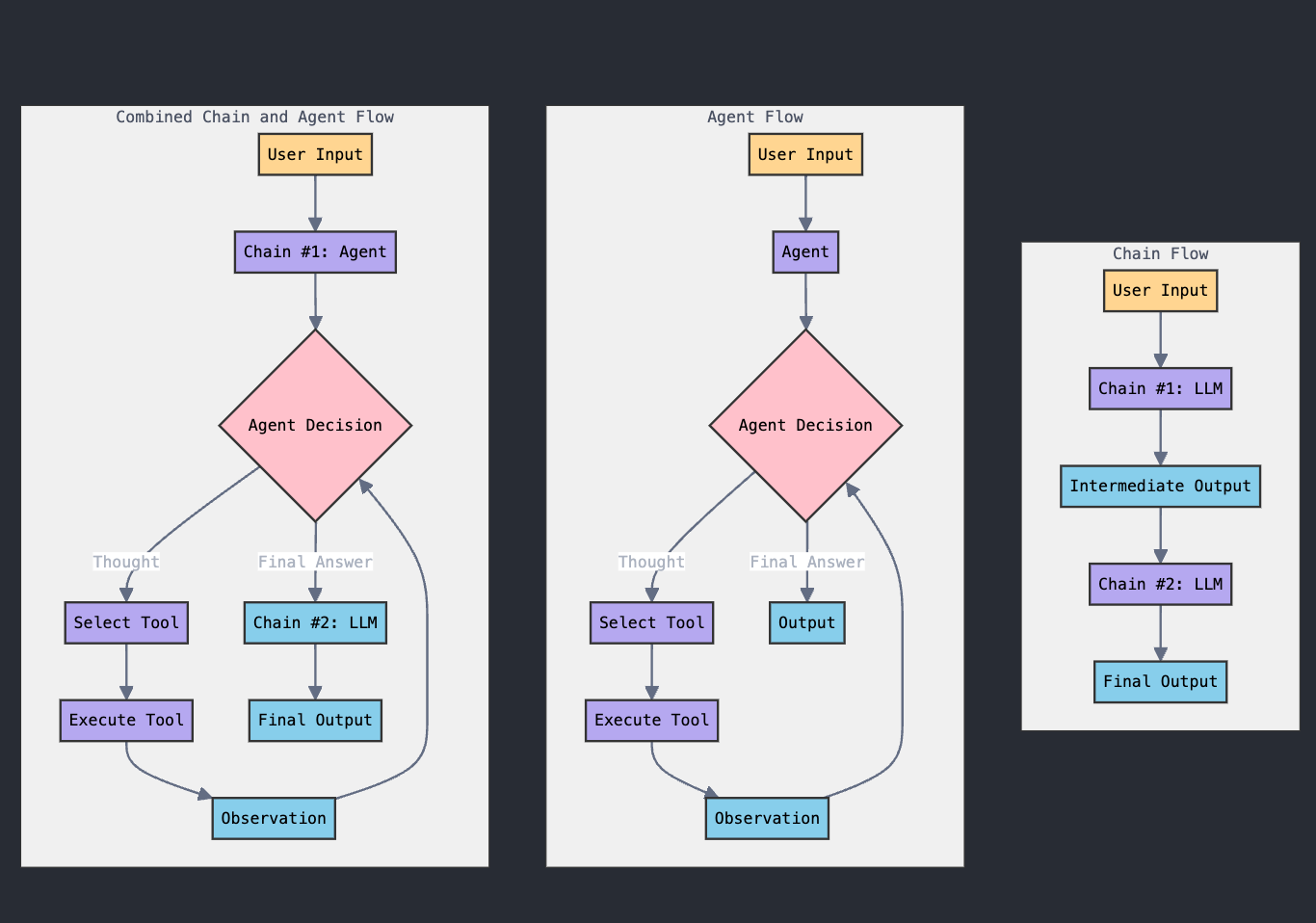

Building an LLM application requires thoughtful system design, particularly when choosing a framework. The right framework should fulfill technical needs, provide key features, meet performance goals, and integrate with current systems. To help navigate these choices, I'll be sharing a series of bite-sized posts over the coming weeks that explore two fundamental concepts in AI system design: chains and agents.

Our journey will unfold as follows:

Part 1: Understanding Chains in AI Systems (this article)

Part 2: Exploring AI Agents

Part 3: Integrating Chains and Agents

Part 4: Comparative Analysis: Chains vs. Agents

This series will provide insights into these crucial components, their integration, and how to choose the best approach for your specific use case. By breaking down these complex topics into digestible portions, we'll build a comprehensive understanding of LLM application design, empowering you to make informed decisions in your AI projects.

Why Might an Agent Framework Not Work?

While agent frameworks that select and execute tools on their own seem powerful, they can falter in certain situations. Building a trusted, production-grade LLM application goes beyond basic functionality: it demands precise, accurate, and consistent outputs to truly satisfy customers. Because an agent relies on the LLM to decide which tool to call at each step, it can struggle with complex tasks that push the model's limits, and a single wrong decision can compromise the reliability of the whole run.

What Is a Chain?

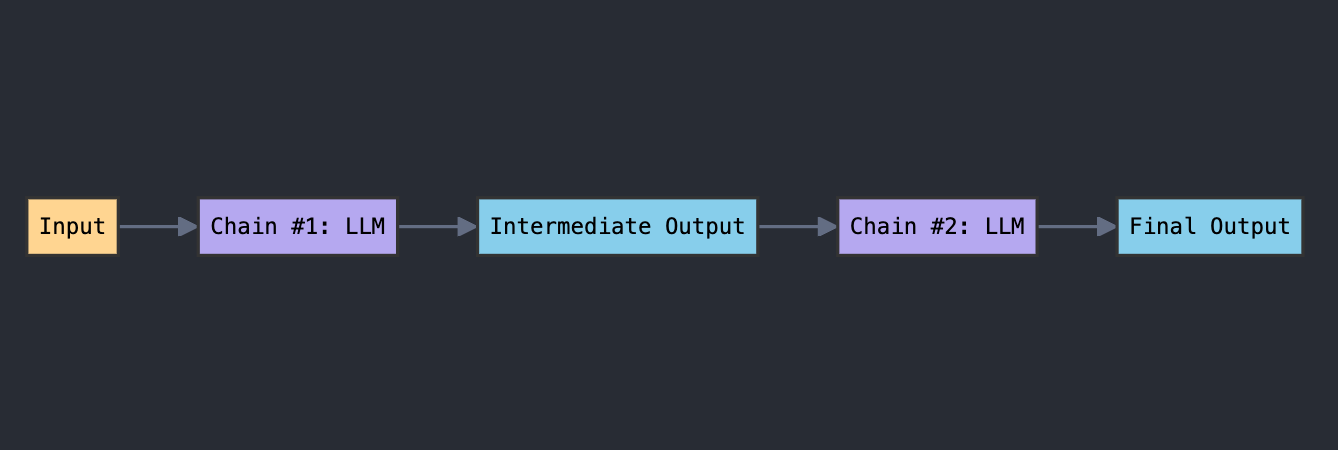

A simple chain flow, often referred to as a SimpleSequentialChain in LangChain, is a straightforward and linear process where multiple operations (usually involving language models) are executed in a predetermined sequence. Each step in the chain takes the output from the previous step as its input, processes it, and passes its output to the next step.

Let's break this down further:

Structure:

It consists of two or more "links" in the chain, typically language models or other processing components.

These links are arranged in a fixed order.

Data Flow:

Input enters the first link in the chain.

The output of each link becomes the input for the next link.

The final link produces the overall output of the chain.

Simplicity:

There's no branching or complex decision-making within the chain.

It follows a straightforward, predictable path from start to finish.
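To make the data flow and fixed ordering concrete, here's a minimal, framework-free sketch in Python. It is not LangChain's SimpleSequentialChain; it simply assumes each link is a function that takes one string and returns one string, and the toy links are hypothetical stand-ins for model calls.

```python
from functools import reduce
from typing import Callable, Sequence

# A "link" is assumed to be any function that takes one string and returns one string.
Link = Callable[[str], str]


def run_chain(links: Sequence[Link], user_input: str) -> str:
    """Run links in a fixed order, feeding each link's output into the next."""
    # reduce applies each link to the running value, left to right.
    return reduce(lambda value, link: link(value), links, user_input)


# Hypothetical toy links standing in for model calls.
def strip_whitespace(text: str) -> str:
    return text.strip()


def shout(text: str) -> str:
    return text.upper()


def add_exclamation(text: str) -> str:
    return text + "!"


print(run_chain([strip_whitespace, shout, add_exclamation], "  hello chain  "))
# prints "HELLO CHAIN!"
```

Because the order is fixed in the list, every run takes the same path, which is exactly what makes a simple chain predictable.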

Use Cases:

Text summarization followed by translation

Question answering where one model interprets the question and another generates the answer

Content generation where one model creates an outline and another expands it into full text
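Here's one way the first use case (summarization followed by translation) might look as a two-link chain. The llm parameter is a hypothetical callable that takes a prompt and returns text, so you could plug in whichever model client you use; fake_llm is a local stand-in that just echoes the last line of its prompt, so no real summarizing or translating happens.

```python
from typing import Callable

# Hypothetical signature: any function that takes a prompt and returns the model's text.
LLM = Callable[[str], str]

SUMMARIZE_TEMPLATE = "Summarize the following text in one sentence:\n\n{text}"
TRANSLATE_TEMPLATE = "Translate the following text into {language}:\n\n{text}"


def summarize_then_translate(llm: LLM, text: str, language: str) -> str:
    """Two-link chain: the summary produced by link 1 is the input to link 2."""
    summary = llm(SUMMARIZE_TEMPLATE.format(text=text))
    return llm(TRANSLATE_TEMPLATE.format(language=language, text=summary))


# Fake model for local testing: records every prompt and echoes its last line.
prompts: list[str] = []


def fake_llm(prompt: str) -> str:
    prompts.append(prompt)
    return prompt.splitlines()[-1]


print(summarize_then_translate(fake_llm, "Chains run steps in order.", "French"))
```

Swapping the two templates (or adding a third) changes the chain without touching the model code, which is the "combine specialized steps" advantage in miniature.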

Advantages:

Easy to understand and implement

Predictable behavior

Can combine specialized models for different tasks

Limitations:

Lack of flexibility for more complex workflows

No ability to handle conditional processing or loops

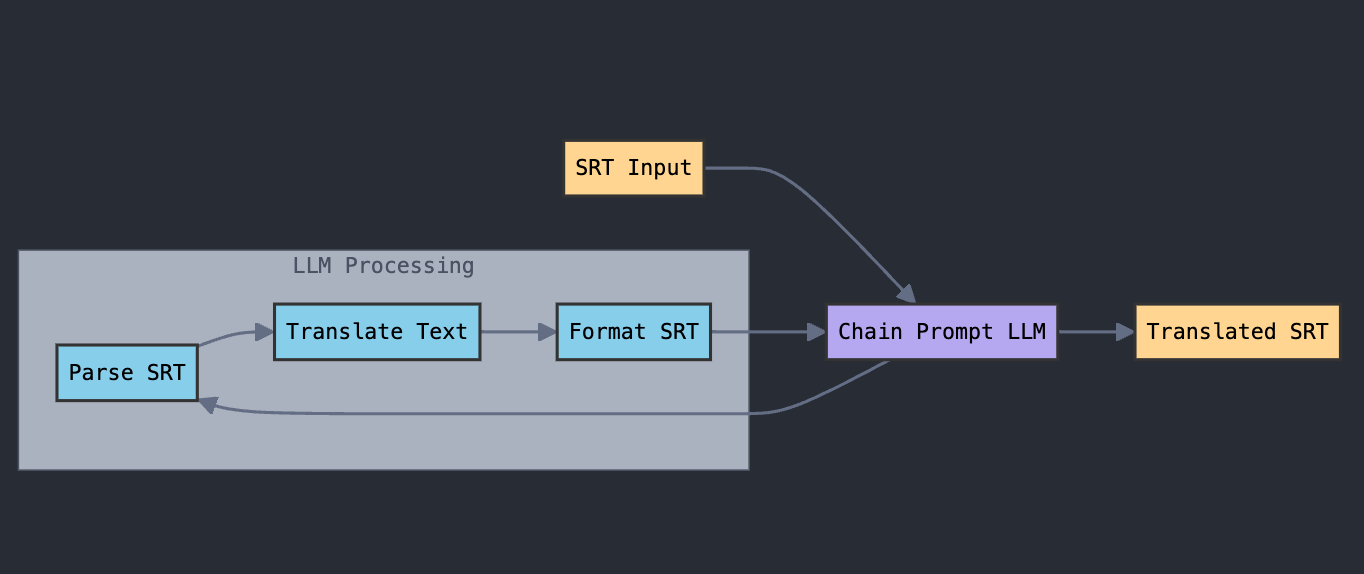

SRT Translator: A Chain-Based Approach

Introduction

To illustrate how chains work in a practical LLM application, let's explore a real-world scenario: translating subtitles into multiple languages. This use case demonstrates how breaking a complex task into smaller, manageable steps can improve efficiency and flexibility.

System Prompt

The core of our translation process is guided by a carefully crafted system prompt:

You are an expert subtitle translator. Your task is to translate the given SRT file content from its original language to {target_language}. Follow these steps:

1. Parse the SRT content and extract the text, ignoring timestamps and subtitle numbers.

2. Translate the extracted text to {target_language}.

3. Format the translated text back into proper SRT format, maintaining the original timing.

The Chain-Based Approach

Rather than tackling the entire translation process in one go, we'll divide it into four distinct steps, each handled by a separate chain:

SRT Parsing

Input: Raw SRT content

Process: Validates the SRT format

Output: Confirmed, properly formatted SRT
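Parsing and validation don't strictly need a language model. As an assumption (the article doesn't specify how this step is implemented), here's a sketch of the step in plain Python that checks each cue block and returns structured cues; the Cue class and parse_srt function are hypothetical names.

```python
import re
from dataclasses import dataclass

# An SRT timing line, e.g. "00:00:01,000 --> 00:00:03,500".
TIMING_RE = re.compile(r"^\d{2}:\d{2}:\d{2},\d{3} --> \d{2}:\d{2}:\d{2},\d{3}$")


@dataclass
class Cue:
    index: int   # subtitle number
    timing: str  # original timing line, kept verbatim
    text: str    # dialogue, possibly spanning several lines


def parse_srt(raw: str) -> list[Cue]:
    """Split raw SRT into cues, raising ValueError on the first malformed block."""
    cues = []
    # Normalize Windows line endings, then split on the blank lines between cues.
    blocks = re.split(r"\n\s*\n", raw.replace("\r\n", "\n").strip())
    for block in blocks:
        lines = block.split("\n")
        if len(lines) < 3:
            raise ValueError(f"Incomplete cue block: {block!r}")
        number, timing = lines[0].strip(), lines[1].strip()
        if not number.isdigit():
            raise ValueError(f"Bad cue number: {number!r}")
        if not TIMING_RE.match(timing):
            raise ValueError(f"Bad timing line: {timing!r}")
        cues.append(Cue(int(number), timing, "\n".join(lines[2:])))
    return cues
```

Failing fast here means later links never receive malformed input, so an error points straight at the file rather than at the model.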

Text Extraction

Input: Parsed SRT

Process: Extracts only the text content, removing timestamps and numbering

Output: Plain text content from the subtitles
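Assuming the input has already passed the parsing step, text extraction can rely on SRT's block structure: the first line of each cue is its number and the second is its timing. The extract_text helper below is a hypothetical sketch of that idea.

```python
import re


def extract_text(srt: str) -> list[str]:
    """Return one dialogue string per cue, dropping cue numbers and timestamps.

    Assumes the SRT was already validated, so every block has a number
    line and a timing line before its text.
    """
    texts = []
    for block in re.split(r"\n\s*\n", srt.replace("\r\n", "\n").strip()):
        lines = block.split("\n")
        # Line 0 is the cue number, line 1 the timing; the rest is dialogue.
        texts.append(" ".join(line.strip() for line in lines[2:]))
    return texts
```

Joining multi-line cues with a space keeps exactly one string per cue, which makes alignment easier in the translation step, at the cost of losing the original line breaks.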

Translation

Input: Extracted text and target language

Process: Translates the text to the specified language

Output: Translated text
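For translation, one common trick (an assumption here, not something the article prescribes) is to send all cues in a single prompt with [n] markers, so each translated line can be mapped back to its cue. The llm callable and the prompt wording are hypothetical; fake_llm only uppercases the numbered lines to simulate a reply.

```python
import re
from typing import Callable

# Hypothetical: any function that sends a prompt to a model and returns its reply.
LLM = Callable[[str], str]

PROMPT = (
    "Translate each numbered line into {target_language}. "
    "Keep the numbering and return exactly one line per number.\n\n{lines}"
)
LINE_RE = re.compile(r"^\[(\d+)\]\s*(.*)$")


def translate_lines(llm: LLM, texts: list[str], target_language: str) -> list[str]:
    """Translate cue texts in one call, using [n] markers to keep them aligned."""
    numbered = "\n".join(f"[{i}] {t}" for i, t in enumerate(texts, start=1))
    reply = llm(PROMPT.format(target_language=target_language, lines=numbered))
    translated = {}
    for line in reply.splitlines():
        match = LINE_RE.match(line.strip())
        if match:
            translated[int(match.group(1))] = match.group(2)
    # Fail loudly instead of silently shifting subtitles if the model drops a line.
    missing = [i for i in range(1, len(texts) + 1) if i not in translated]
    if missing:
        raise ValueError(f"Model reply is missing lines: {missing}")
    return [translated[i] for i in range(1, len(texts) + 1)]


def fake_llm(prompt: str) -> str:
    # Stand-in "model": echoes only the numbered lines, uppercased.
    return "\n".join(l.upper() for l in prompt.splitlines() if l.startswith("["))


print(translate_lines(fake_llm, ["Hello there.", "How are you?"], "Spanish"))
```

The missing-line check matters: if the model merges or drops a line, silently accepting the reply would push every later subtitle onto the wrong timestamps.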

SRT Formatting

Input: Translated text and original SRT

Process: Reformats the translated text back into SRT format, maintaining original timing

Output: Fully translated SRT file
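The formatting step only needs the original cue numbers and timing lines plus the translated text, so it can be done deterministically. This format_srt function is a hypothetical sketch that assumes the original SRT has already been validated and that there is exactly one translation per cue.

```python
import re


def format_srt(original: str, translated: list[str]) -> str:
    """Rebuild an SRT file, reusing each cue's original number and timing line."""
    blocks = re.split(r"\n\s*\n", original.replace("\r\n", "\n").strip())
    if len(blocks) != len(translated):
        raise ValueError(f"{len(blocks)} cues but {len(translated)} translations")
    out = []
    for block, text in zip(blocks, translated):
        # Keep the first two lines (number and timing) exactly as they were.
        number, timing = block.split("\n")[:2]
        out.append(f"{number}\n{timing}\n{text}")
    # SRT separates cues with a blank line; end the file with a newline.
    return "\n\n".join(out) + "\n"
```

Since the timing lines are copied verbatim, the translated file stays in sync with the video even if the model's output varies in length.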

Benefits of the Chain-Based Approach

Breaking down the process into these steps offers several advantages:

Modularity: Each step is independent and can be modified or replaced without affecting the others.

Reusability: Individual components (like the text extractor or translator) can be repurposed for other projects.

Debugging: Isolating specific parts of the process makes it easier to identify and resolve issues.

Flexibility: Additional steps (such as quality checking) can be easily integrated, or certain steps can be bypassed if necessary.
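The modularity, debugging, and flexibility benefits can be illustrated with a small pipeline runner. In this hypothetical sketch, each step is a named function, the runner records every intermediate output so you can see where things went wrong, a quality-check step is added by appending to the list, and any step can be skipped by name. The toy steps stand in for the real SRT links.

```python
from typing import Any, Callable

# A step is a (name, function) pair; each function takes the previous output.
Step = tuple[str, Callable[[Any], Any]]


def run_pipeline(
    steps: list[Step], data: Any, skip: frozenset[str] = frozenset()
) -> tuple[Any, list[tuple[str, Any]]]:
    """Run named steps in order, skipping any in `skip`, and record each output."""
    trace = []
    for name, fn in steps:
        if name in skip:
            continue
        data = fn(data)
        trace.append((name, data))  # inspect the trace to find the failing step
    return data, trace


# Hypothetical toy steps standing in for extraction and translation.
def split_cues(text: str) -> list[str]:
    return text.split("|")


def fake_translate(lines: list[str]) -> list[str]:
    return [line.upper() for line in lines]


def quality_check(lines: list[str]) -> list[str]:
    if any(not line.strip() for line in lines):
        raise ValueError("empty translation")
    return lines


steps: list[Step] = [("extract", split_cues), ("translate", fake_translate)]
steps.append(("quality_check", quality_check))  # new step, no other code changes

result, trace = run_pipeline(steps, "hello|world")
print(result)
print([name for name, _ in trace])
```

Keeping the steps as plain data (a list) is what lets you add, replace, or bypass a link without rewriting the others.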

By leveraging this chain-based approach, we create a robust, adaptable system for translating SRT files, showcasing the power and versatility of LLMs in practical applications.

Further Exploration: AI Systems, Automation, and Iterative Feedback

The distinction between chain-based and agent-based approaches in AI relates closely to the difference between Robotic Process Automation (RPA) and Agentic Process Automation, as discussed in my previous article. A key factor in the success of AI systems, particularly language models (LMs), is iterative feedback.

Grounding these models in real-world data through techniques like Ground-Truth-in-the-Loop and reinforcement learning significantly enhances their practicality and adaptability. While current methodologies primarily focus on pre-training and fine-tuning, an approach using small, modular components allows for more frequent updates. This strategy better aligns AI systems with real-world applications.

The ultimate goal of this process is to make LMs more user-friendly and applicable. However, it's worth noting that at present, this approach still requires considerable effort from users. As we continue to develop these systems, effective data management and careful consideration of ethical implications remain crucial factors.

Hi, I'm Kevin Wang!

By day, I'm a product manager diving into the latest tech innovations. In my free time, I'm the creator behind tulsk.io, a platform dedicated to PM Intelligence. My passion? Breaking down the complexities of web3 and AI into bite-sized, understandable concepts.

My goal is simple: to make cutting-edge technologies accessible to everyone. Whether you're looking to expand your knowledge or apply these insights to your personal growth, I'm here to guide you through the exciting worlds of web3 and AI.

Why Choose Curiosity Insights?

We offer:

Cutting-edge Analysis: Our team of experts dissects the latest developments in AI and Web3, providing you with actionable insights.

Strategic Advantage: Stay ahead of the curve and make informed decisions that drive innovation and growth.

Comprehensive Coverage: From investment opportunities to technological breakthroughs, we've got you covered.
