RPA vs. Agentic Process Automation

Integrating LLM-based agents is crucial for your business. Here's what I've discovered.

Hello! Kevin Wang here. I'm a devoted product manager by day, but come evening, I'm the force behind a Gen-AI tool, tulsk.io. My mission is to simplify complex concepts in the web3 and AI worlds, making them easily accessible and adaptable for your knowledge and growth. Keen to dive deeper? Join the tulsk.io community. Your support is invaluable and ensures I can keep sharing insights for budding entrepreneurs like you. Let's journey together!

Robotic Process Automation (RPA) isn't a new concept. The previous generation of automation tools consisted primarily of no-code, rules-based engines designed to run highly reproducible workflows. Screen-scraping technologies, for instance, emerged in the 1980s and played a pivotal role in the development of RPA by helping business users automate back-office workflows. Similarly, companies like Zapier, Workato, and Tray took a hybrid approach to workflow automation: they served both business users and developers and effectively became the linchpin for data flow within organizations.
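To make "rules-based engine" concrete, here is a minimal Python sketch of the if-this-then-that logic such tools encode. The `Rule` class, the invoice fields, and the 10,000 threshold are hypothetical illustrations, not taken from any real RPA product.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    """One if-this-then-that rule, as a no-code RPA builder might store it."""
    name: str
    condition: Callable[[dict], bool]
    action: Callable[[dict], dict]


def run_rules(record: dict, rules: list[Rule]) -> dict:
    """Apply every matching rule in order; records no rule matches pass through unchanged."""
    for rule in rules:
        if rule.condition(record):
            record = rule.action(record)
    return record


# Hypothetical back-office rules for routing invoices.
RULES = [
    Rule("flag_large",
         condition=lambda r: r["amount"] > 10_000,
         action=lambda r: {**r, "route": "manager_review"}),
    Rule("auto_approve",
         condition=lambda r: r["amount"] <= 10_000 and r["vendor_known"],
         action=lambda r: {**r, "route": "auto_pay"}),
]

print(run_rules({"amount": 2_500, "vendor_known": True}, RULES))   # routed to auto_pay
print(run_rules({"amount": 100, "vendor_known": False}, RULES))    # no rule matches: no route
```

The second case is the telling one: an input no rule anticipated (a small invoice from an unknown vendor) simply passes through without a route, and handling it means writing yet another rule by hand.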

The automation landscape, however, is changing rapidly. Recent advances in AI are revolutionizing how we work and instilling new behaviors and expectations in enterprise-grade products. In both horizontal and vertical automation, product developers are now harnessing Large Language Models (LLMs) to automate tasks once considered too advanced or futuristic.

The Future: Autonomous Agents

The future of automation might resemble its past more than expected. Companies such as Adept and Cognosys are developing "Autonomous Agents": AI-powered software bots that complete tasks autonomously from natural-language commands. These agents can create, complete, and reprioritize tasks with minimal human intervention.

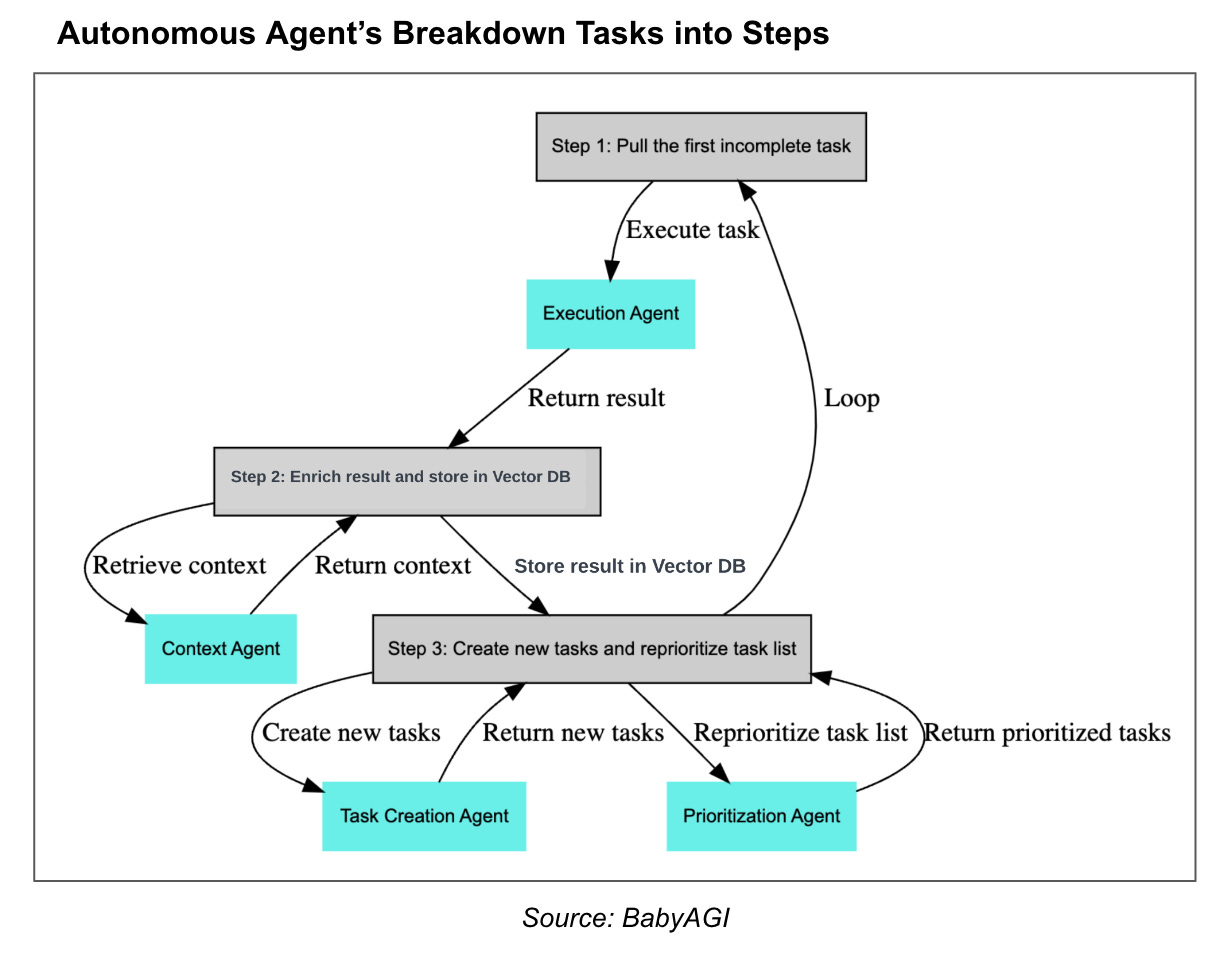

The concept of Autonomous Agents isn't new; it dates back to the 1990s. Recent advances in LLMs, however, have reignited interest in the field, and projects like Auto-GPT and BabyAGI are leading a new wave of innovation in automation. These agents can be given access to specific tools or to the entire internet, and they decide which tool to use based on the user's input.
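Tool selection can be sketched as a dispatch step. In a real agent, an LLM reads the user's input and names the tool to call; in this hypothetical Python sketch, a simple keyword-overlap score stands in for that model call, and both tools (`web_search`, `calculator`) are placeholders rather than real integrations.

```python
import re
from typing import Callable


def web_search(text: str) -> str:
    # Placeholder: a real agent would call a search API here.
    return f"[search results for: {text}]"


def calculator(text: str) -> str:
    # Evaluate the first arithmetic expression found in the text. The regex admits only
    # digits, whitespace, parentheses, dots and + - * /, and eval gets no builtins.
    # This is a toy guard, not a production-safe evaluator.
    match = re.search(r"[\d(][\d\s+\-*/().]*", text)
    if match is None:
        return "no expression found"
    return str(eval(match.group().strip(), {"__builtins__": {}}))


# Hypothetical tool registry: name -> (trigger keywords, implementation).
TOOLS: dict[str, tuple[set[str], Callable[[str], str]]] = {
    "web_search": ({"search", "find", "latest", "news", "lookup"}, web_search),
    "calculator": ({"calculate", "compute", "sum", "multiply", "math"}, calculator),
}


def choose_tool(user_input: str) -> str:
    """Stand-in for the LLM's decision: score each tool by keyword overlap with the input."""
    words = set(user_input.lower().split())
    scores = {name: len(words & keywords) for name, (keywords, _) in TOOLS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "web_search"  # default when nothing matches


def run_agent(user_input: str) -> str:
    tool_name = choose_tool(user_input)
    _, tool = TOOLS[tool_name]
    return f"{tool_name}: {tool(user_input)}"


print(run_agent("calculate 12 * 7"))             # calculator: 84
print(run_agent("find the latest news on RPA"))  # handled by web_search
```

Swapping `choose_tool` for a real model call would leave the rest of the dispatch unchanged, which is why the decision is isolated in its own function.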

These agents work by breaking large tasks into smaller subtasks and drawing on memory of earlier results to guide each next action, which makes for a more sophisticated approach to automation.
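The decompose-then-execute loop with memory can be sketched as a task queue. This is a simplified illustration in the spirit of projects like BabyAGI, not their actual code: `decompose` and `execute` are hypothetical stand-ins for LLM calls, and the plan is hard-coded.

```python
from collections import deque

# Hypothetical plan table standing in for an LLM that splits a goal into subtasks.
PLANS = {
    "write market report": ["collect competitor list", "summarize pricing", "draft report"],
}


def decompose(task: str) -> list[str]:
    # Returns [] when the task is small enough to execute directly.
    return PLANS.get(task, [])


def execute(task: str, memory: list[str]) -> str:
    # Stand-in for an LLM or tool call; a real agent would include `memory` in its prompt.
    return f"done: {task} [context={len(memory)}]"


def run(goal: str, max_steps: int = 10) -> list[str]:
    queue = deque([goal])
    memory: list[str] = []  # results of completed subtasks, visible to later ones
    for _ in range(max_steps):  # cap the loop in case decomposition never bottoms out
        if not queue:
            break
        task = queue.popleft()
        subtasks = decompose(task)
        if subtasks:
            # Replace a large task with its subtasks, kept in order at the front of the queue.
            queue.extendleft(reversed(subtasks))
        else:
            memory.append(execute(task, memory))
    return memory


for entry in run("write market report"):
    print(entry)
```

Each later subtask sees more accumulated context than the one before it, which is the role memory plays in guiding the agent's actions.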

Agentic Process Automation

Building on the idea of Autonomous Agents, a significant development in this field is Agentic Process Automation (APA), explored in depth in the arXiv paper "ProAgent: From Robotic Process Automation to Agentic Process Automation." APA represents a major shift in automation: it uses LLMs to build more advanced and autonomous systems.

What are the key components of APA?

Unlike traditional RPA, which relies on predefined rules and workflows, APA takes a more dynamic and intelligent approach. It has two key components:

Agentic Workflow Construction: LLM-based agents construct workflows from human instructions. They identify the parts of a process that require dynamic decision-making and insert other agents into the workflow at those points.

Agentic Workflow Execution: In this phase, agents monitor and manage the workflows as they run. When a workflow reaches a point that requires dynamic decision-making, these agents step in to make the decision.
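The split between construction and execution can be sketched in Python. This is a toy illustration of the idea, not ProAgent's implementation: `construct_workflow` hard-codes what an LLM would generate from the instruction, and `stub_agent` fakes the model's judgment with a keyword check. Deterministic steps run as ordinary rules; only the step flagged with a `prompt` is handed to the agent.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Step:
    name: str
    run: Optional[Callable[[dict], dict]] = None  # deterministic, RPA-style step
    prompt: Optional[str] = None                  # dynamic decision handed to an agent


def stub_agent(prompt: str, state: dict) -> dict:
    """Hypothetical stand-in for an LLM agent. A real system would send `prompt`
    and `state` to a model; here a keyword check fakes the judgment."""
    text = state["email"].lower()
    label = "complaint" if any(w in text for w in ("refund", "broken", "late")) else "inquiry"
    return {**state, "category": label}


def construct_workflow(instruction: str) -> list[Step]:
    # Construction: in APA an LLM would derive these steps from `instruction`
    # and flag the judgment call as an agent step. Hard-coded here.
    return [
        Step("normalize", run=lambda s: {**s, "email": s["email"].strip()}),
        Step("classify", prompt="Is this email a complaint or an inquiry?"),
        Step("route", run=lambda s: {**s, "queue": "support" if s["category"] == "complaint" else "sales"}),
    ]


def execute_workflow(steps: list[Step], state: dict) -> dict:
    # Execution: rule steps run as-is; the agent intervenes only where a step
    # was flagged as needing dynamic decision-making.
    for step in steps:
        if step.prompt is not None:
            state = stub_agent(step.prompt, state)
        elif step.run is not None:
            state = step.run(state)
    return state


workflow = construct_workflow("Route incoming customer emails to the right team")
print(execute_workflow(workflow, {"email": "  My order arrived broken. I want a refund.  "}))
```

Keeping most steps deterministic and delegating only the flagged step mirrors the hybrid idea: rules where rules suffice, an agent where judgment is needed.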

Is AI a black box?

AI systems differ significantly from traditional IT systems in transparency and in how problems get solved. IT systems are generally transparent, operating on a WYSIWYG (What You See Is What You Get) basis, and their problems can often be resolved easily or with moderate effort. AI systems, especially those built on deep learning, behave more like black boxes. When an AI system fails, the problem is often complex or even open-ended, and far harder to resolve than a typical IT issue.

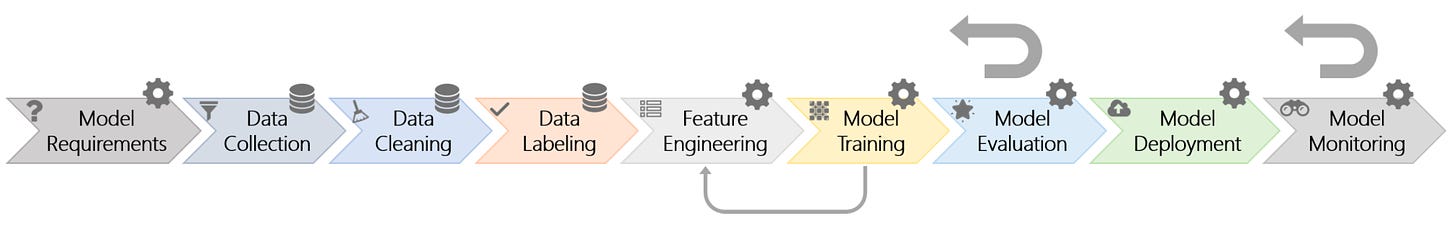

The intricate dependencies in AI software engineering processes further highlight this complexity, as documented in works like "Software Engineering for Machine Learning: A Case Study" (ICSE 2019). Language models (LMs) appear to have done little to simplify these processes. ChatGPT's behavior, for instance, changes over time, which underscores why AI systems need continuous, iterative improvement based on feedback, a process that remains a cornerstone of developing and refining AI technologies.

What are the principles for building effective AI agents?

Ground-Truth-in-the-Loop

Definition: This principle emphasizes the importance of incorporating reliable, real-world data into the training and operation of AI agents. It counters the limitations of self-generated training data and self-evaluation.

Example: In language models, augmenting training with data from knowledge graphs or search engines can improve accuracy and reduce hallucinations.
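One way to put ground truth in the loop is to check model-generated facts against a trusted store before they are used or fed back into training. The sketch below assumes a tiny, hypothetical knowledge graph of (subject, relation, object) triples and sorts claims into supported, correctable, and unverifiable.

```python
# Hypothetical trusted knowledge graph of (subject, relation, object) triples.
KG = {
    ("Zapier", "type", "workflow automation"),
    ("Auto-GPT", "type", "autonomous agent"),
}


def ground_check(claims: list[tuple[str, str, str]]) -> dict[str, list]:
    """Sort model-generated claims by what the knowledge graph says about them."""
    report: dict[str, list] = {"supported": [], "corrected": [], "unverifiable": []}
    for claim in claims:
        subject, relation, _ = claim
        if claim in KG:
            report["supported"].append(claim)
            continue
        # Same subject and relation but a different object: the KG contradicts the claim.
        truth = [t for t in KG if t[:2] == (subject, relation)]
        if truth:
            report["corrected"].append((claim, truth[0]))
        else:
            # The KG has nothing to say; a search engine could be consulted next.
            report["unverifiable"].append(claim)
    return report


model_output = [
    ("Zapier", "type", "workflow automation"),
    ("Auto-GPT", "type", "RPA tool"),
    ("BabyAGI", "written_in", "Python"),
]
for bucket, items in ground_check(model_output).items():
    print(bucket, items)
```

The (wrong, right) pairs in `corrected` are the kind of grounded feedback that could augment training data, instead of relying on the model to judge its own output.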

Handling Multi-Objective Optimization

Definition: This involves designing agents that can balance and optimize multiple objectives simultaneously. It recognizes that focusing on a single objective may not always yield the best overall outcomes.

Example: Autonomous vehicles must balance objectives like safety, speed, fuel efficiency, and passenger comfort. AI agents in these systems must make real-time decisions considering all these factors.
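A common way to handle several objectives is weighted-sum scalarization, often combined with hard constraints for objectives that must never be traded away. The sketch below is illustrative only: the maneuvers, scores (normalized to [0, 1]), weights, and safety threshold are made up, and real autonomous-driving planners are far more involved.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    safety: float      # all scores hypothetical, normalized to [0, 1], higher is better
    speed: float
    efficiency: float
    comfort: float


WEIGHTS = {"safety": 0.5, "speed": 0.2, "efficiency": 0.15, "comfort": 0.15}
MIN_SAFETY = 0.8  # hard constraint: no amount of speed or comfort buys back lost safety


def score(action: Action) -> float:
    # Weighted sum collapses the objectives into one comparable number.
    return sum(weight * getattr(action, key) for key, weight in WEIGHTS.items())


def choose(actions: list[Action]) -> Action:
    # Filter by the hard constraint first, then optimize the weighted sum.
    feasible = [a for a in actions if a.safety >= MIN_SAFETY]
    if not feasible:
        raise ValueError("no action satisfies the safety constraint")
    return max(feasible, key=score)


candidates = [
    Action("overtake", safety=0.70, speed=0.95, efficiency=0.60, comfort=0.50),
    Action("keep_lane", safety=0.95, speed=0.60, efficiency=0.80, comfort=0.90),
    Action("slow_down", safety=0.99, speed=0.30, efficiency=0.70, comfort=0.85),
]
print(choose(candidates).name)  # keep_lane
```

Treating safety as a constraint rather than only a weight means that no gain in the other objectives, however large, can make an unsafe maneuver win.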

Employing Modularity

Definition: Modularity means building AI systems from specialized, independent modules that work together. This makes development and maintenance more manageable and improves adaptability.

Example: In robotic systems, different modules may handle specific tasks like navigation, object recognition, and manipulation. Each module is developed and improved independently but works in concert with others.
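Modularity can be sketched as modules behind a shared interface. In this hypothetical Python example, recognition, navigation, and manipulation each implement the same `process` method, so one module can be upgraded without touching the others. The module classes and the dictionary-based state are assumptions for illustration.

```python
from typing import Protocol


class Module(Protocol):
    """Shared interface every module implements."""
    name: str

    def process(self, state: dict) -> dict: ...


class ObjectRecognizer:
    name = "recognition"

    def process(self, state: dict) -> dict:
        # Stub: pretend the camera frame contains a cup.
        return {**state, "objects": ["cup"]}


class Navigator:
    name = "navigation"

    def process(self, state: dict) -> dict:
        # Drive toward the first recognized object, if any.
        target = state["objects"][0] if state["objects"] else None
        return {**state, "at": target}


class Manipulator:
    name = "manipulation"

    def process(self, state: dict) -> dict:
        # Grasp whatever the robot has navigated to.
        return {**state, "holding": state["at"]}


class Robot:
    def __init__(self, modules: list[Module]) -> None:
        self.order = [m.name for m in modules]
        self.modules = {m.name: m for m in modules}

    def replace(self, module: Module) -> None:
        # Swap in an upgraded module without touching the others.
        self.modules[module.name] = module

    def step(self, state: dict) -> dict:
        for name in self.order:
            state = self.modules[name].process(state)
        return state


class BetterRecognizer:
    """Hypothetical upgraded recognition module that now also detects a mug."""
    name = "recognition"

    def process(self, state: dict) -> dict:
        return {**state, "objects": ["mug", "cup"]}


robot = Robot([ObjectRecognizer(), Navigator(), Manipulator()])
print(robot.step({})["holding"])  # cup
robot.replace(BetterRecognizer())
print(robot.step({})["holding"])  # mug
```

Because navigation and manipulation only depend on the shared state keys, upgrading recognition changes the robot's behavior without any edits to the other two modules.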

For a deeper understanding of these principles and their applications in AI, you can refer to the article "Agent: What, Why, How."

Conclusion

Iterative feedback is crucial for the success of AI systems, especially language models (LMs). Grounding these models in real-world data through Ground-Truth-in-the-Loop and reinforcement learning makes them more practical and adaptable. Current methods focus on pre-training and fine-tuning, but building systems from small, modular components allows more frequent updates and better alignment with real-world applications. This process aims to make LMs more user-friendly, though today it still demands significant effort from users. Effective data management and ethical considerations are also key to this development.

🌟 Spread the Curiosity! 🌟

Enjoyed "Curiosity Ashes"? Share it to inspire innovation and knowledge!